Auto Cleanplate for Nuke

The original version of this article can be viewed here

I was recently watching a demo of the Remove tool in Mocha Pro, which is a very powerful way of cleaning a foreground object out of the background of your plate. My first thought was “I wonder if I could build this in Nuke”, and about two minutes later I had figured out the technique I’m about to demonstrate. It’s a bit hacky and nowhere near as powerful as the Mocha tool, but it gives us some of the same basic functionality.

The common method of generating a cleanplate (if one hasn’t been shot) is to stabilise your shot, take still frames from various points in the footage where different areas of the background are revealed, and paint them together to remove the foreground.

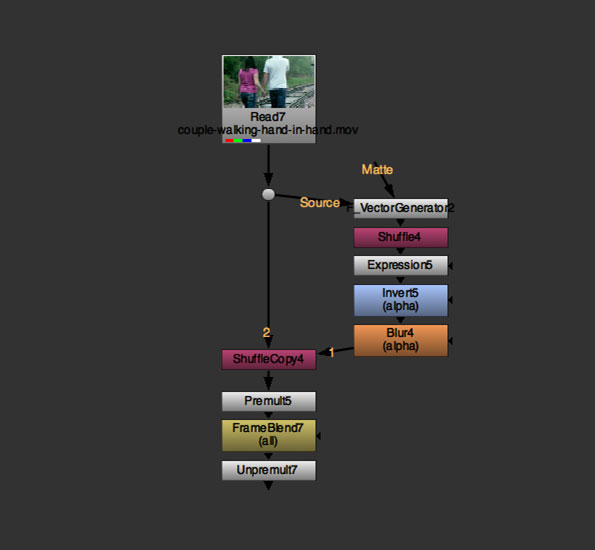

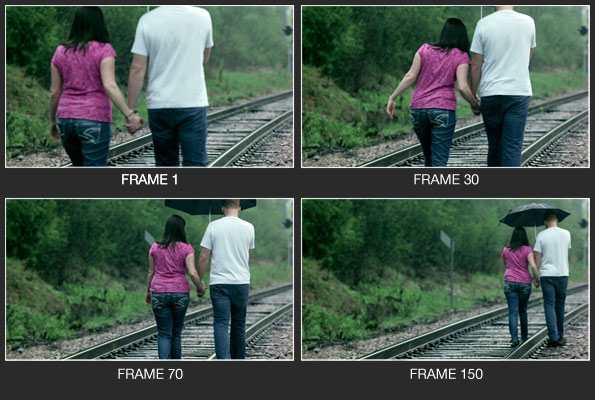

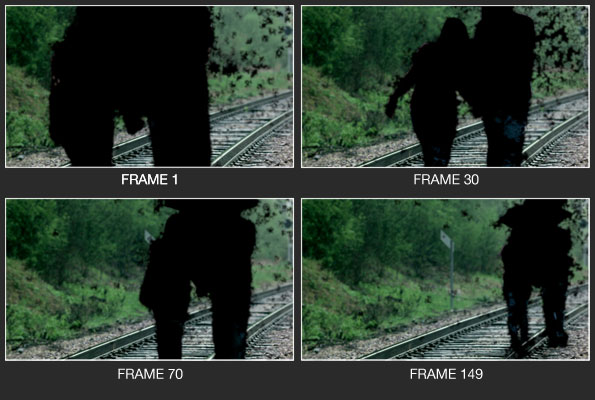

Let’s say we have a shot like the one below. There are parts of the background that are only ever seen for a few frames in the gap between the walking couple’s arms.

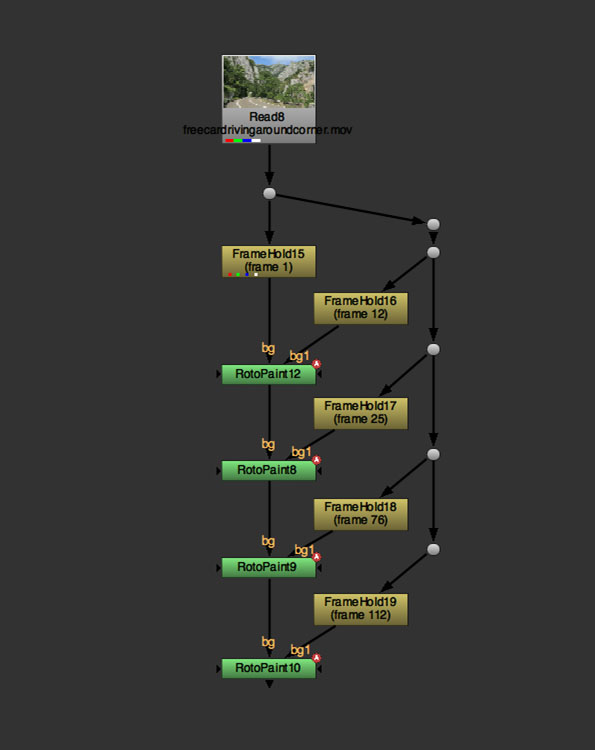

Doing this by hand would involve a lot of FrameHold nodes and painting through small sections at a time. The node tree below is the kind of thing you would typically end up with.

So how can I make this quicker and easier? The idea I came up with involves throwing a garbage matte around our foreground subject for the length of the shot. This doesn’t need to be a tight roto, so it takes me 5 or 10 minutes to do the 150 frames.

I then invert the alpha and premultiply the image.

The next step is to do a FrameBlend over the duration of the shot.

We are left with streaks of black where our foreground subject has moved through the shot. But if we look at our frame-blended alpha channel, we can see that the streaks are not fully transparent: they still hold partial alpha from the frames where the background was revealed.

So the final step is to add an Unpremultiply, which immediately fills in the partially transparent areas and gives us an instant cleanplate (almost).

However, we are left with a black hole in our image. This is where there is no transparency information (i.e. an alpha value of 0), which means that this part of the background was never revealed at any point in the shot.
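To see why the Unpremultiply fills in the streaks and why the hole stays black, here is a minimal per-pixel sketch in plain Python (not Nuke code). It assumes a single-channel pixel, a garbage matte where 1.0 means foreground, and a FrameBlend that is a straight average over the frame range; `clean_pixel` is a hypothetical helper name. Dividing the blended premultiplied value by the blended alpha gives the average background value over only those frames where the pixel was revealed.

```python
def clean_pixel(samples):
    """Sketch of Invert -> Premult -> FrameBlend -> Unpremult for one pixel.

    samples: list of (value, matte) pairs, one per frame, where matte is the
    garbage-matte alpha (1.0 = foreground, 0.0 = background).
    Returns (clean_value, blended_alpha).
    """
    n = len(samples)
    # Invert the matte so the background gets alpha 1, then premultiply.
    alphas = [1.0 - matte for _, matte in samples]
    premult = [value * a for (value, _), a in zip(samples, alphas)]
    # FrameBlend (assumed here to be a plain average over all frames).
    blended_value = sum(premult) / n
    blended_alpha = sum(alphas) / n
    # Unpremultiply: divide by the blended alpha. Where it is 0 the background
    # was never revealed, so this sketch leaves the pixel black (the "hole").
    if blended_alpha == 0.0:
        return blended_value, blended_alpha
    return blended_value / blended_alpha, blended_alpha


# Background value 0.2 is visible in only one of four frames.
print(clean_pixel([(0.9, 1.0), (0.9, 1.0), (0.2, 0.0), (0.9, 1.0)]))  # (0.2, 0.25)
# Foreground covers the pixel in every frame: no alpha, so it stays black.
print(clean_pixel([(0.9, 1.0)] * 3))  # (0.0, 0.0)
```

The 1/n from the average cancels in the division, so a pixel revealed in a single frame is recovered as cleanly as one revealed in many. Only a pixel revealed in no frame at all is left empty.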

I remedy this by applying a Blur node to our alpha before it’s premultiplied. Blurring spreads a little non-zero alpha into the edges of the masked area, so as I dial up the blur amount our black hole recedes until it’s gone. This leaves us with a small artefact of our foreground, but we can easily paint this out the old-fashioned way.
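The effect of the Blur can be sketched the same way on a single row of pixels. This is again a plain-Python illustration, not Nuke's Blur node: it assumes a simple box blur of the inverted matte, and `box_blur` and `clean_row` are hypothetical helper names. In the example, pixel 2 is covered by the foreground in both frames, so without blur it comes back black. With a blur radius of 1 it picks up some alpha and gets filled, but with the foreground value, which is the small artefact described above.

```python
def box_blur(row, radius):
    """Simple 1D box blur; the window is clipped at the edges of the row."""
    out = []
    for i in range(len(row)):
        lo, hi = max(0, i - radius), min(len(row), i + radius + 1)
        out.append(sum(row[lo:hi]) / (hi - lo))
    return out


def clean_row(frames, radius):
    """Invert matte -> blur alpha -> premult -> FrameBlend -> Unpremult, one row.

    frames: list of (values, matte) rows, where matte 1.0 = foreground.
    """
    width = len(frames[0][0])
    sum_premult, sum_alpha = [0.0] * width, [0.0] * width
    for values, matte in frames:
        alpha = box_blur([1.0 - m for m in matte], radius)
        for x in range(width):
            sum_premult[x] += values[x] * alpha[x]
            sum_alpha[x] += alpha[x]
    # The 1/n of the FrameBlend average cancels here; zero-alpha pixels stay black.
    return [p / a if a > 0 else 0.0 for p, a in zip(sum_premult, sum_alpha)]


BG, FG = 0.2, 0.9  # hypothetical background and foreground values
frames = [
    ([BG, FG, FG, BG, BG], [0.0, 1.0, 1.0, 0.0, 0.0]),
    ([BG, BG, FG, FG, BG], [0.0, 0.0, 1.0, 1.0, 0.0]),
]
print(clean_row(frames, radius=0))  # [0.2, 0.2, 0.0, 0.2, 0.2]  (hole at pixel 2)
print([round(v, 3) for v in clean_row(frames, radius=1)])  # [0.2, 0.433, 0.9, 0.433, 0.2]
```

The blur trades the black hole for foreground contamination near the matte edges. That is why a modest blur plus a quick paint fix is preferable to pushing the blur amount as high as it will go.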

The obvious limitations are that, because our background is blended together over many frames, it works best with a locked-off camera and a background that doesn’t move much (wind-blown trees, for example, would be a problem). Otherwise you need rock-solid stabilisation, and any camera movement that creates parallax in the image is still a problem. On the plus side, frame blending the image effectively removes all grain from the shot, so our cleanplate comes de-grained for free.

This seemed like such a simple method that I couldn’t believe it wasn’t already established. A quick Google search for “auto cleanplate Nuke” found this video by WACOMalt who had independently come up with the exact same method (he was also inspired by the Remove tool in Mocha Pro). He gives a great description of the process.

AUTOMATING THE PROCESS

So my next thought was “How can I improve this further?” Sure, it’s super quick to throw a garbage matte roto around your foreground, but what if there was a way of completely automating this process?

A few more minutes of thinking and I had figured it out. I just needed a way of creating the garbage matte that didn’t involve hand drawing it.

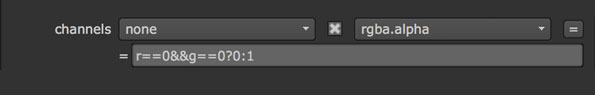

In NukeX there is the Furnace node F_VectorGenerator. This uses optical flow to create a vector channel describing the motion in the image. If we have stabilised our plate, then in theory the only thing moving in the image should be the foreground we want to remove.

So I branch off an F_VectorGenerator node from our plate and then Shuffle the vector channels into RGB.

We can see the X and Y motion of the image represented as R and G colour values. But what happened on frame 150? Because it is our last frame, there is no next frame to compare it to in order to calculate motion, so the motion vectors are blank. I remedy this by using 149 as the last frame to analyse.

I then add an Expression node that takes any value in the red or green channel that isn’t 0 (so any positive or negative number) and sets the alpha channel to 1 there. Now anywhere that is moving should have an alpha value of 1, whilst anywhere not moving (i.e. the background) has an alpha value of 0.
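The Expression node's test can be sketched in plain Python, assuming the vectors have already been shuffled so that red holds the X motion and green the Y motion. `motion_mask` is a hypothetical name, and its `threshold` parameter is an assumption not found in the original setup. The default of 0.0 reproduces the “any non-zero value” rule, while a small positive value would ignore tiny amounts of vector noise. The resulting mask marks the moving foreground, playing the same role as the hand-drawn garbage matte.

```python
def motion_mask(vectors, threshold=0.0):
    """Turn per-pixel (u, v) motion vectors into a 0/1 mask.

    vectors: 2D list of (u, v) tuples, u = red (X motion), v = green (Y motion).
    threshold: hypothetical tolerance; 0.0 reproduces the "any non-zero value" test.
    """
    return [
        [1.0 if abs(u) > threshold or abs(v) > threshold else 0.0 for (u, v) in row]
        for row in vectors
    ]


frame = [
    [(0.0, 0.0), (1.5, 0.0), (0.0, 0.0)],
    [(0.0, -0.2), (0.0, 0.0), (0.01, 0.0)],
]
print(motion_mask(frame))        # [[0.0, 1.0, 0.0], [1.0, 0.0, 1.0]]
print(motion_mask(frame, 0.05))  # [[0.0, 1.0, 0.0], [1.0, 0.0, 0.0]]
```

Using `abs()` catches motion in either direction, matching the “positive or negative” wording above.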

I shuffle this back in to use as our alpha channel and premultiply. There are some blotchy artefacts from the motion vectors; these can be reduced by adjusting the “Vector Detail” and “Smoothness” settings in F_VectorGenerator. Any remaining artefacts that change from frame to frame shouldn’t matter, as they will get blended out by the rest of the process.

Here is the final result after the FrameBlend and Unpremultiply. It is very impressive, considering it took no manual effort to create. Again, we have a few artefacts where the background was never revealed, which we can easily paint out.

ADDITIONAL CONSIDERATIONS

This method runs into problems when the foreground does not move continuously. If any part of the walking couple stops moving for a few frames, F_VectorGenerator no longer sees any motion there, so that part does not get masked out.

The main downside, though, is that it gets very heavy. The optical flow used in F_VectorGenerator is pretty processor intensive, and the FrameBlend then asks Nuke to calculate around 150 frames of it at once, so generating our single frame takes about as long as rendering the entire frame range. Even so, the cleanplate took only 2-3 minutes to render on my Mac laptop, so this is still by far the quickest way to go. Once it is complete, we would just write the generated frame out as a pre-comp with a Write node.

I tried this method again on this time-lapse shot of people walking in front of the Lincoln Memorial. Different parts of the steps are revealed in plenty of separate frames, but painting out the people using FrameHolds would be a pain, and rotoing them would be an even bigger one.

But a couple of minutes of processing time in Nuke generates the frame below. Again, there are some ghosting artefacts caused by people who stay still throughout the shot, but this has done the vast majority of the work for us for free.

THE NUKE NODE

I wrapped all of this up in a gizmo, reducing the settings to the minimum needed.

You can find the source code here on GitHub

- over 1,000 free tools for The Foundry's Nuke

Comments

Anyway, well done!

Thank you so much, Richard, for sharing.

I am learning!!!

We just released another new tool for creating auto cleanplates. We have already used it successfully in some feature film productions.

Maybe it is an option, too. Just take a look at our webpage:

www.keller.io/superpose

cheers

Great technique, but I haven't had the chance to use it yet.

I was wondering: what about using the Difference node instead of F_VectorGenerator? You don't need NukeX, there's no need to shuffle into the alpha channel, and it should be less heavy...

You would experience a black alpha on the first frame.

What do you think?
